Builders, investors, and innovators on the state of AI

SuperAI PULSE 2025

Boston Dynamics / The AI Institute


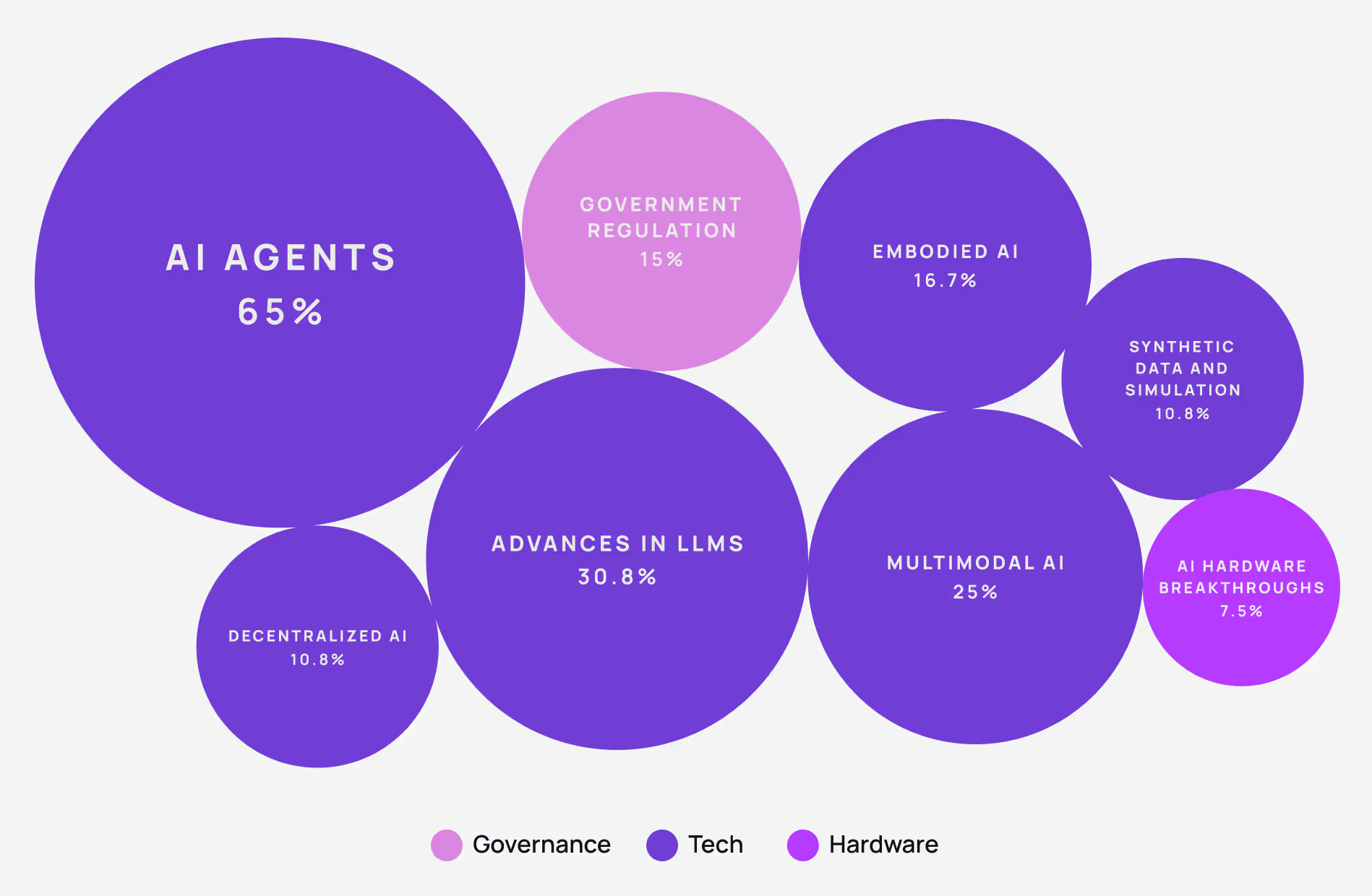

Q1: Which AI developments will have the biggest impact in 2025?

The pace of artificial intelligence (AI) innovation is accelerating – but not all emerging AI technologies are seen as equally transformative. To understand which trends truly matter, we surveyed over 150 AI founders, researchers, investors, and engineers. The results reveal where attention, investment, and deployment are heading in 2025.

| Development | Responses (%) |
|---|---|
| AI Agents | 65% |
| Advances in LLMs | 30.8% |
| Multimodal AI | 25% |
| Embodied AI | 16.7% |
| Government Regulation | 15% |
| Decentralized AI | 10.8% |
| Synthetic Data & Simulation | 10.8% |
| AI Hardware Breakthroughs | 7.5% |

AI agents were cited by 65% of respondents, more than twice the share of the next most popular trend (advances in LLMs, at 30.8%).

This overwhelming consensus around AI agents highlights a growing demand for autonomous, goal-directed AI tools that can act and reason across applications.

As companies race to integrate agents into their products and APIs, this trend signals a shift from passive models to workflow-integrated AI systems.

Q2: Which AI trend is most overhyped right now?

As the AI sector matures, practitioners are becoming more discerning about which trends deserve attention. Our survey of industry leaders reveals which developments they view as overhyped in 2025.

Over 60% of respondents (62.5% combined) identified AGI timelines, AI replacing coders, or AI agents as the most overhyped trend – a clear signal of growing fatigue with speculative narratives.

| Trend | Responses (%) |
|---|---|
| AGI timelines | 23.3% |
| AI replacing coders | 20% |
| AI agents | 19.2% |
| Open-source LLMs | 15% |
| AI and Blockchain | 11.7% |
| AI art/music | 7.5% |
| Alignment research | 3.3% |

AI agents: simultaneously the most promising and the most polarising development in 2025.

The results reveal a sharp divide between the AI trends that drive headlines and those that deliver real-world value. Despite dominating public discourse, artificial general intelligence (AGI), AI agents, and “AI replacing developers” were flagged as the most inflated.

This highlights a pragmatic shift within the AI community – one that favours tangible progress over speculation.

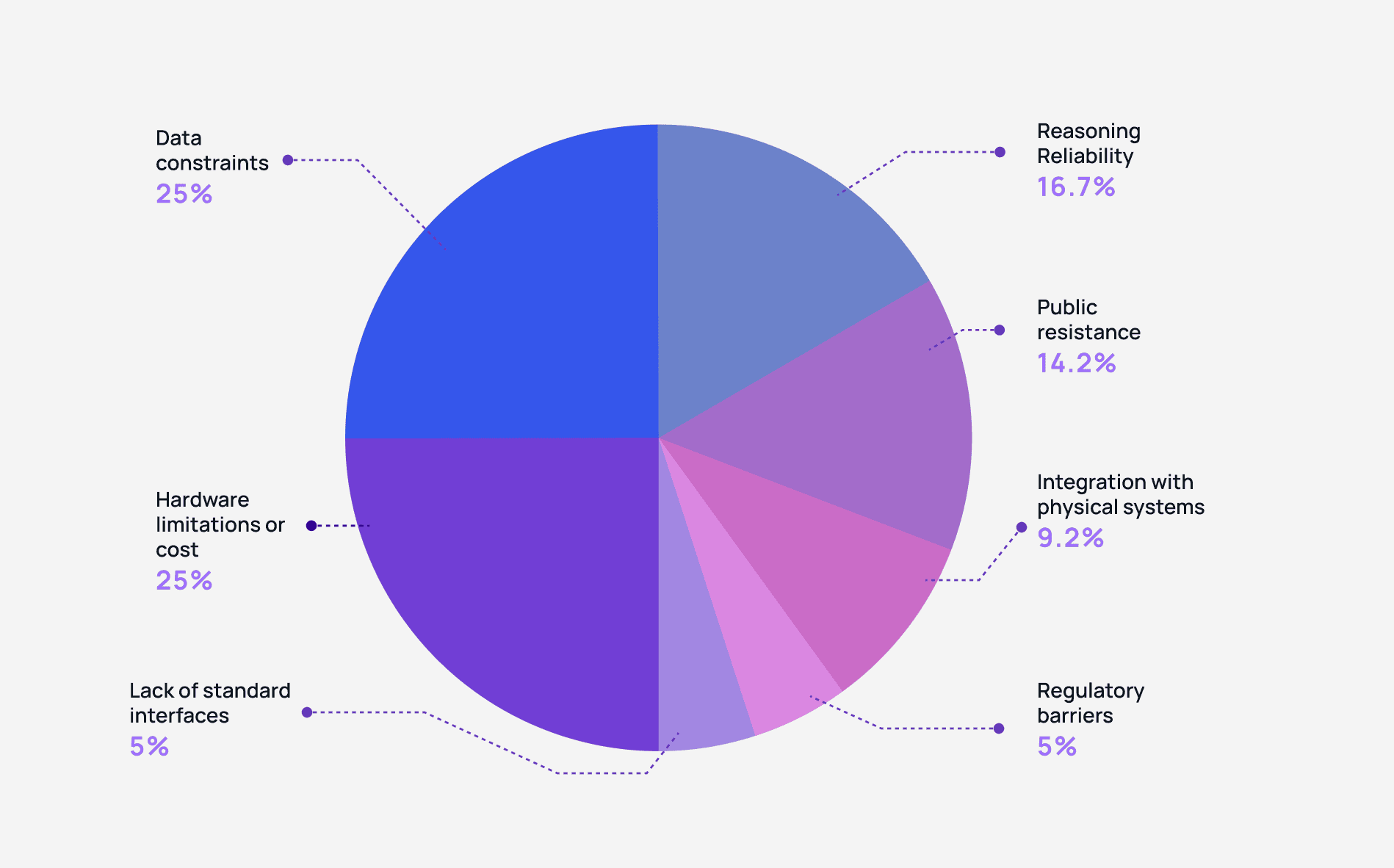

Q3: What’s the biggest blocker to real-world AI deployment today?

Despite breakthroughs in large language models (LLMs) and generative AI, many artificial intelligence (AI) teams continue to struggle with one key challenge: real-world deployment. To uncover what’s holding the field back, we asked respondents to identify the top obstacles to operationalising AI in production environments.

Half of respondents cite infrastructure limits – hardware and data – as the primary bottleneck, signalling that AI progress is now outpacing the legacy systems that support it.

| Blocker | Responses (%) |
|---|---|
| Data constraints (quality, access, privacy) | 25% |
| Hardware limitations or cost | 25% |
| Reasoning reliability | 16.7% |
| Public resistance or misunderstanding | 14.2% |
| Integration with physical systems | 9.2% |
| Regulatory barriers | 5% |
| Lack of standard interfaces or APIs | 5% |

The results are clear: AI infrastructure – not model architecture – is now the primary constraint. A full 50% of respondents cite data or compute as the biggest barrier to scalable AI deployment.

Concerns about model reliability and societal acceptance trail behind, highlighting how operational friction is overtaking technical feasibility.
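For readers who want to reproduce the combined figures, here is a minimal Python sketch of the arithmetic behind the 50% headline. It assumes Q3 was a single-choice question, so that shares can be added without double counting; the survey text does not state this explicitly, although the shares do sum to roughly 100%. The grouping names and the `combined_share` helper are hypothetical and exist only for illustration.

```python
# Shares from the Q3 table, in percent of respondents.
Q3_BLOCKERS = {
    "Data constraints (quality, access, privacy)": 25.0,
    "Hardware limitations or cost": 25.0,
    "Reasoning reliability": 16.7,
    "Public resistance or misunderstanding": 14.2,
    "Integration with physical systems": 9.2,
    "Regulatory barriers": 5.0,
    "Lack of standard interfaces or APIs": 5.0,
}

# Hypothetical groupings, chosen for illustration only.
GROUPS = {
    "infrastructure (data + hardware)": [
        "Data constraints (quality, access, privacy)",
        "Hardware limitations or cost",
    ],
    "reliability and acceptance": [
        "Reasoning reliability",
        "Public resistance or misunderstanding",
    ],
}


def combined_share(shares, options):
    """Add up the shares of the given options, rounded to one decimal.

    Assumes each respondent picked exactly one option, so the shares
    are additive.
    """
    return round(sum(shares[option] for option in options), 1)


def summarize(shares, groups):
    """Return the combined share for every named group."""
    return {name: combined_share(shares, opts) for name, opts in groups.items()}


if __name__ == "__main__":
    for name, share in summarize(Q3_BLOCKERS, GROUPS).items():
        print(f"{name}: {share}%")
```

The same helper can be pointed at any of the other single-choice tables in this report to check a combined percentage against the headline text.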

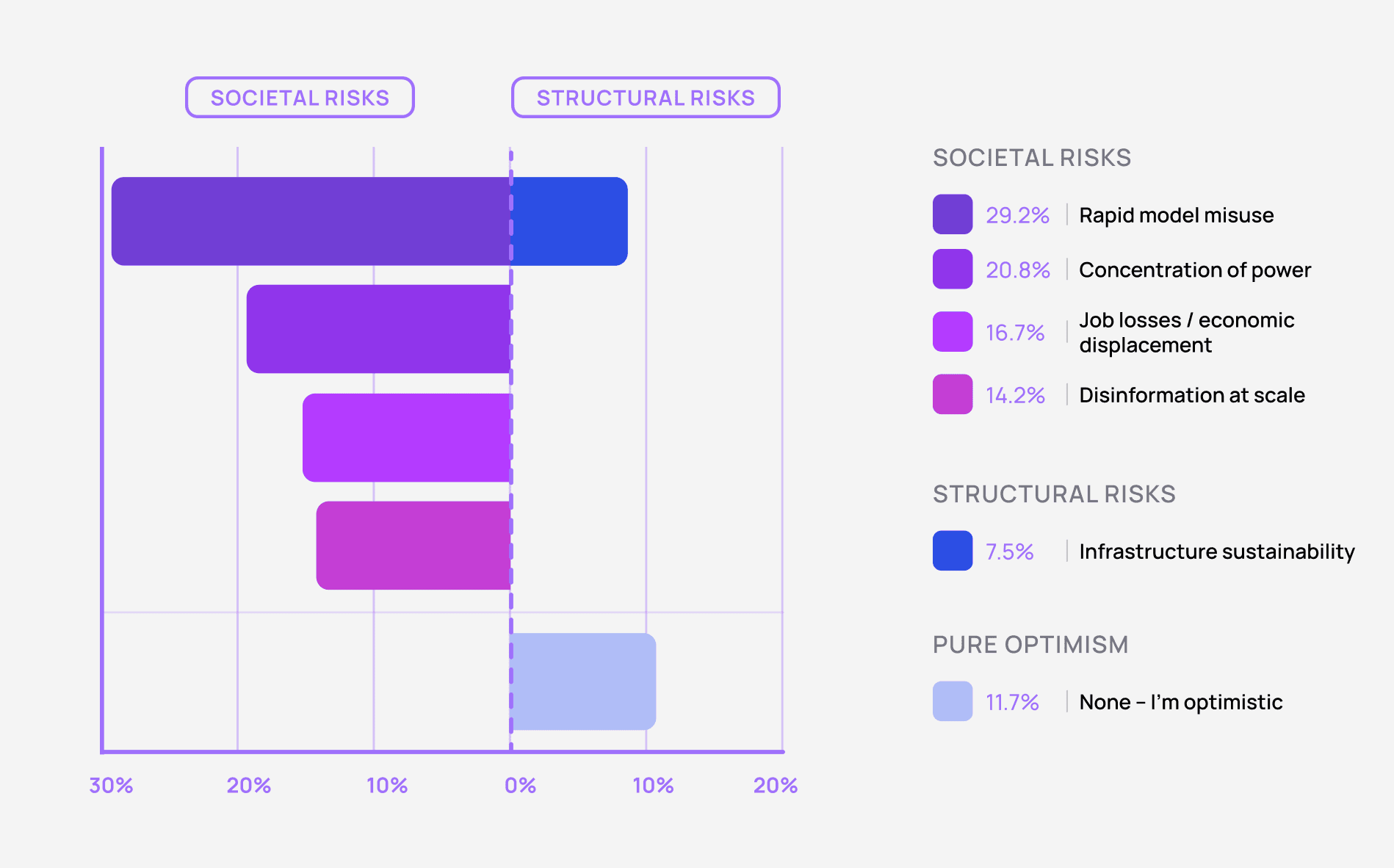

Q4: What is your biggest concern about near-term AI deployment?

While the long-term risks of artificial general intelligence dominate headlines, the real concerns for AI builders today are much more immediate. We asked industry leaders what worries them most as AI becomes increasingly integrated into real-world applications.

| Concern | Responses (%) |
|---|---|
| Rapid model misuse (e.g. scams, deepfakes) | 29.2% |
| Concentration of power (OpenAI, Google, etc.) | 20.8% |
| Job losses / economic displacement | 16.7% |
| Disinformation at scale | 14.2% |
| Infrastructure sustainability (compute, energy) | 7.5% |
| None – I’m optimistic | 11.7% |

The data paints a clear picture: the dominant worries among practitioners today centre on societal consequences, not technical limitations. Roughly 80% of respondents selected misuse of AI models, concentration of power, economic displacement, or disinformation at scale as their top concern.

Far fewer flagged infrastructure sustainability (7.5%), and 11.7% reported no major concern at all.

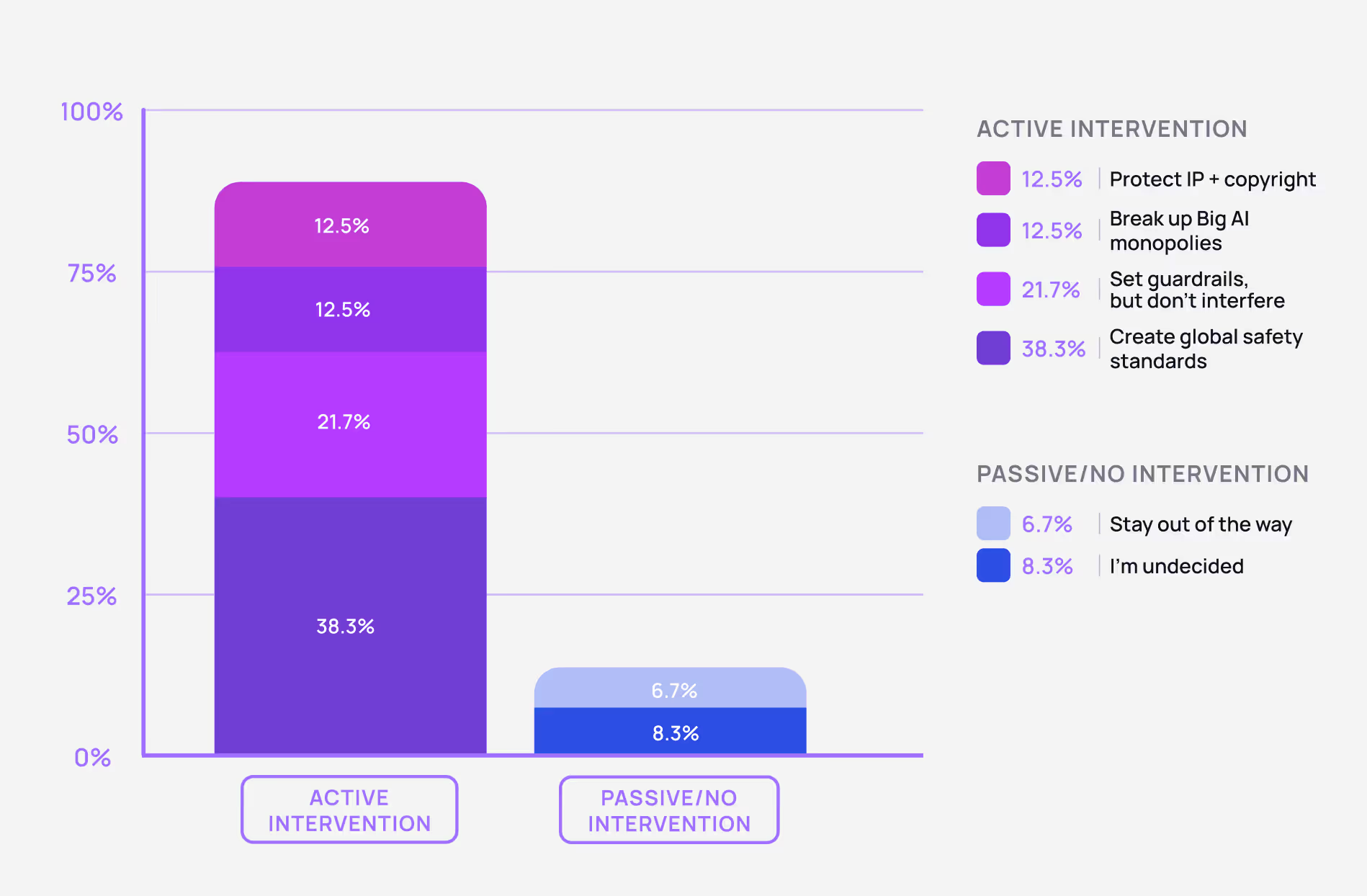

Q5: What role should government play in AI development?

As AI systems grow more capable and deeply embedded in everyday life, the call for AI regulation has reached a critical inflection point. We asked respondents what role they believe governments should play in shaping the development, deployment, and oversight of AI technologies.

| Role | Responses (%) |
|---|---|
| Create global safety standards | 38.3% |
| Set guardrails, but don’t interfere | 21.7% |
| Break up Big AI monopolies | 12.5% |
| Protect IP + copyright | 12.5% |
| I’m undecided | 8.3% |
| Stay out of the way | 6.7% |

The overwhelming consensus is that the era of hands-off AI development is coming to an end.

A full 85% of respondents support direct government involvement – whether through establishing international AI safety standards, introducing policy guardrails, breaking up monopolistic AI platforms, or enforcing intellectual property rights.

Q6: In your work, what has changed the most in the last 6 months?

From toolchains to team priorities, the last six months have brought dramatic shifts in how AI professionals work. We asked respondents what’s changed most in their daily workflows – and the results reveal clear trends in two key areas.

| Change | Responses (%) |
|---|---|
| Tooling has improved massively | 40.8% |
| Customer expectations of AI have increased | 29.2% |
| My workflow hasn’t changed much | 10% |
| Regulation/legal concerns have grown | 10% |
| We’re still figuring out what to build | 10% |

The overwhelming trend is that tooling and expectations are advancing faster than everything else. A combined 70% of respondents cite either massively improved tooling or increased expectations from customers.

In contrast, growing regulatory concerns and internal indecision over what to build each account for just 10% of responses.

Q7: Which of these best describes your current attitude towards AI?

From bold experimentation to measured pragmatism, we then asked respondents to describe their current mindset toward artificial intelligence. The responses reveal a field grounded in strategic execution – with a strong appetite for progress, and little patience for hype.

| Attitude | Responses (%) |
|---|---|
| Strategic: AI is a tool, not the point | 55% |
| Bold: Build fast, break things | 28.3% |
| Overwhelmed: It’s all moving too fast | 8.3% |
| Cautious: Let’s not rush it | 6.7% |
| Skeptical: We’ve been here before | 1.7% |

83% of respondents describe their current approach to AI as either “Strategic” or “Bold” – underscoring a strong shift from uncertainty to pragmatic deployment.

Fewer than 10% remain cautious or sceptical, reinforcing the view that artificial intelligence is now seen as a core capability, not a speculative risk.

The signals behind the noise

The future of artificial intelligence isn’t speculative – it’s already taking shape in the hands of those building it.

From infrastructure challenges to shifting workflows, from governance demands to divided sentiment on AI agents, the SuperAI PULSE 2025 survey captures the real-time perspectives of AI founders, investors, researchers, and operators across the globe.

These aren’t projections. They’re the frontlines.

Want the full picture?

Download the complete SuperAI PULSE 2025 report, including shareable charts, expert commentary, and exclusive insights from 100+ leaders driving the AI economy.

Why it matters:

- AI trend forecasting is no longer guesswork – these insights map where real-world deployment is heading

- Use it to benchmark your strategy, align product roadmaps, or back up your next boardroom discussion

- Covers AI Agents, governance policy, LLMs, infrastructure bottlenecks, and attitudinal shifts across the ecosystem

Prefer live insight over static charts?

Join us in person at SuperAI Singapore, June 10-11, 2026, where these findings turn into panels, debates, and demos that go far beyond the slide deck.